Multi Modal Human Action Dataset

Written on June 15th, 2018 by Dan Peluso

During my first year at Northeastern University, I participated in research at Raymond Fu’s SMILE Lab, where I worked directly under Lichen Wang.

My first task was maintaining the lab’s main website, a WordPress site that used many plugins to display the lab’s current research and achievements.

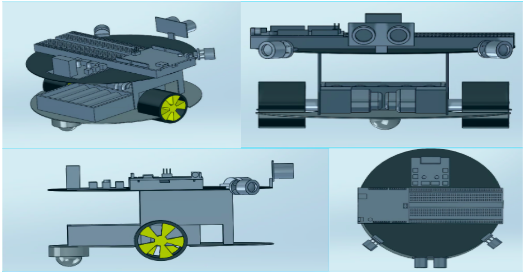

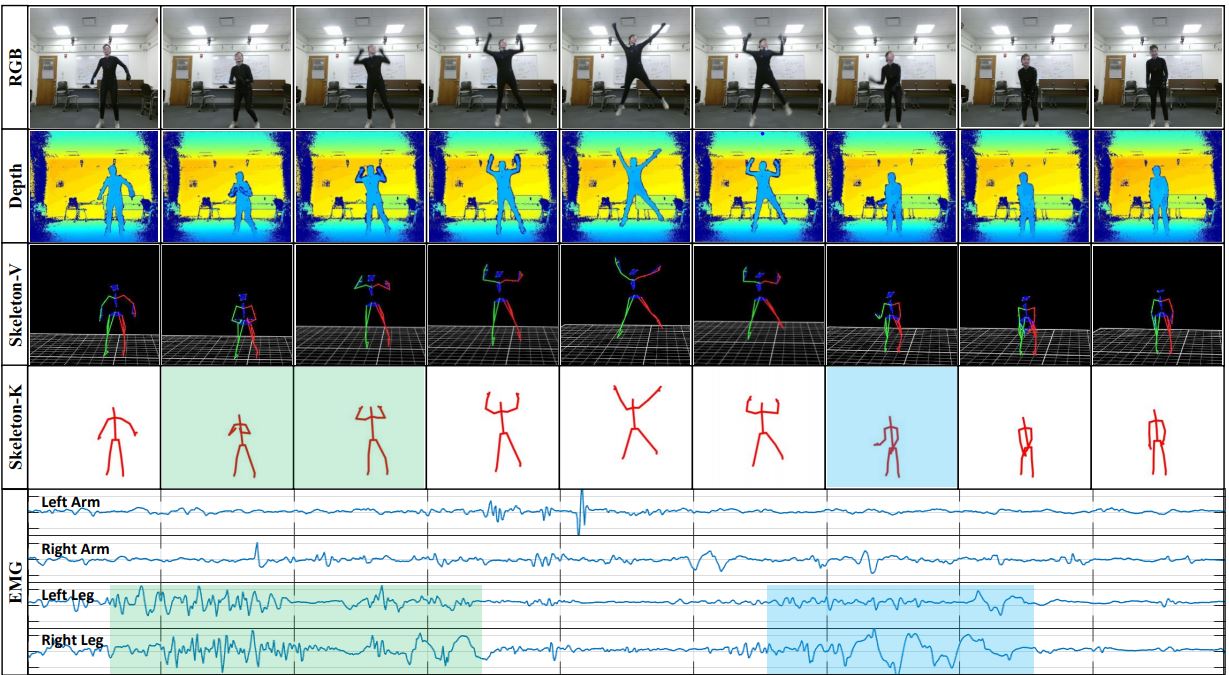

My next work was in data collection and labeling, which is when I moved over to my main project, the Multi Modal Human Action Dataset. The labeling was done with a custom MATLAB program that let me move quickly through frames and label the actions according to the video feed. These labels were then used to train and test state-of-the-art models on the dataset (see paper below). I even collected some of my own data!

In the summer of 2018 I moved into a more skilled position at the SMILE Lab, where I tuned hyperparameters for the various models we were testing on our dataset. My responsibilities were to learn the models my director wanted to add to the paper and to run MATLAB scripts that automated trying different hyperparameter combinations. This work ran on the school’s Discovery cluster, which was accessible over SSH with lab credentials.
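The original scripts were written in MATLAB and are not shown here. As a rough illustration of what automating hyperparameter combinations can look like, the following is a minimal Python sketch of a grid sweep. The parameter names and values are hypothetical, not the ones used in the project.

```python
from itertools import product


def hyperparameter_grid(search_space):
    """Yield one dict per combination of the values in search_space."""
    names = list(search_space)
    for values in product(*(search_space[name] for name in names)):
        yield dict(zip(names, values))


# Hypothetical search space; names and values are illustrative only.
search_space = {
    "learning_rate": [0.001, 0.01, 0.1],
    "batch_size": [32, 64],
}

configs = list(hyperparameter_grid(search_space))

for run_id, config in enumerate(configs):
    # In a real sweep, each config would be handed to a training script
    # or submitted as a separate job on the cluster.
    print(run_id, config)
```

Each combination is independent of the others, which is why this kind of sweep suits a cluster: every configuration can run as its own job.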

Paper

Skills

- WordPress

- HTML / CSS

- MATLAB

- Linux file transfer and shell systems

- Deep Learning

- Machine Learning

- Feature extraction

- CNN

- Data collection

- Cluster use for heavy computing